Most museums offer their audio guide in three to five languages. English, plus the local language, plus one or two others based on gut feeling about who visits. Maybe French. Maybe Mandarin. The decision usually gets made once, during procurement, and then stays locked in for years.

This made sense when every language cost $5,000 to $15,000 to produce. It doesn't make sense anymore.

The old math

The traditional calculation works like this. You commission a script. A translator adapts it for each target language, adjusting cultural references, sentence rhythm, and tone (not just swapping words). Voice talent records in a studio. Someone does QA on every track. The whole process takes weeks per language, and the bill adds up fast.

At $5K-$15K per language, a five-language guide costs $25K-$75K just for translation and recording. That's before you touch hardware, app development, or content updates. So museums agonize over the decision. Is Japanese worth it? We get some Japanese visitors, but is it $10K worth of Japanese visitors? What about Korean? Portuguese?
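The arithmetic behind that range is simple enough to sketch. Here is a minimal Python version. It assumes a flat per-language cost with no volume discount; real quotes vary with script length, number of voices, and QA scope. The function name `translation_budget` is illustrative only.

```python
def translation_budget(num_languages: int,
                       low_per_language: int = 5_000,
                       high_per_language: int = 15_000) -> tuple[int, int]:
    """Return the (low, high) cost range for translation plus studio recording.

    Assumes every language costs the same flat amount (illustrative only).
    """
    return (num_languages * low_per_language,
            num_languages * high_per_language)


low, high = translation_budget(5)
print(f"5 languages: ${low:,} to ${high:,}")  # 5 languages: $25,000 to $75,000
```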

The result: committees, surveys, spreadsheets, and ultimately a compromise. You pick the languages you can afford, knowing full well that thousands of visitors each year will get nothing.

Why the old framework is wrong now

AI-generated audio has collapsed the cost of adding a language to near zero. Not "cheaper." Not "more affordable." Near zero.

The reason is simple. A real-time AI system doesn't pre-record anything. It generates speech on the fly, in whatever language the visitor selects. The same content pipeline that produces your English guide produces your Japanese guide, your Portuguese guide, your Basque guide. No translator. No voice talent. No studio. No per-language QA cycle.
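Here is a minimal sketch of that idea. It assumes a hypothetical `generate_narration` function standing in for the real-time model call; no real API is implied. The visitor's language is just a request parameter, so nothing is produced per language ahead of time.

```python
from dataclasses import dataclass


@dataclass
class Exhibit:
    exhibit_id: str
    source_text: str  # one canonical description, written once


def generate_narration(exhibit: Exhibit, language: str) -> str:
    """Hypothetical stand-in for a real-time AI generation call.

    A real system would send the source text and target language to a model
    and stream speech back; this stub just returns a tagged string.
    """
    return f"[{language}] {exhibit.exhibit_id}: {exhibit.source_text}"


def handle_request(exhibit: Exhibit, visitor_language: str) -> str:
    # The language is only a request parameter: no per-language asset exists
    # ahead of time, so supporting a new language needs no production step.
    return generate_narration(exhibit, visitor_language)


intro = Exhibit("gallery-3-intro", "Placeholder introduction text for gallery 3.")
for language in ["en", "ja", "pt", "eu"]:
    print(handle_request(intro, language))
```

In this sketch, adding Basque (`eu`) means adding a string to a list, not commissioning a recording.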

The question flips entirely. Instead of "can we afford French?" you're asking "why wouldn't we offer every language our visitors speak?"

We've seen museums go from 3 languages to 40+ overnight. Not because they suddenly got a bigger budget, but because the constraint disappeared.

Figuring out which languages your visitors actually speak

Even when adding languages is free, you still want to know your audience. Not to pick winners and losers, but to prioritize rollout, tailor marketing materials, and understand engagement patterns.

Four places to look:

- Ticket sales by nationality. If your booking system captures country of origin, this is your cleanest signal. Sort by volume, and you'll likely find a long tail that surprises you.

- Visitor surveys. Most museums run post-visit surveys. If yours asks about language preference or country of origin, you already have data sitting in a spreadsheet somewhere.

- Google Analytics geo data. Your website traffic by country tends to correlate with actual visitation. It's imperfect (not everyone who browses your site visits in person), but it reveals demand you might not see in ticket data, especially from countries where advance booking is less common.

- Tourism board statistics. Your city or region's tourism office typically publishes visitor demographics each year. Cross-reference this with your own data for a fuller picture.

When we onboard partners, one of the first things we look at together is this data. The patterns are almost always the same: a few dominant languages that everyone expects, followed by a long tail of 10-20 smaller language groups that collectively represent 15-30% of visitors.
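If you want to check this pattern against your own data, here is a short sketch. It ranks languages by visitor count and measures the share that falls outside the top few. The counts are invented for illustration and total 200,000 visitors. Treating the top three as the "dominant" languages via `head_size=3` is an assumption.

```python
# Invented visitor counts by language (e.g. merged from ticketing and surveys).
visitor_counts = {
    "English": 70_000, "Spanish": 50_000, "French": 30_000,
    "German": 14_000, "Portuguese": 8_000, "Dutch": 7_400, "Italian": 6_000,
    "Chinese": 6_000, "Japanese": 4_000, "Korean": 3_000, "Thai": 1_600,
}


def rank_languages(counts: dict[str, int]) -> list[tuple[str, int]]:
    """Sort languages by visitor count, largest first."""
    return sorted(counts.items(), key=lambda item: item[1], reverse=True)


def long_tail_share(counts: dict[str, int], head_size: int = 3) -> float:
    """Fraction of visitors whose language is outside the top `head_size`."""
    ranked = rank_languages(counts)
    tail = sum(count for _, count in ranked[head_size:])
    return tail / sum(counts.values())


for language, count in rank_languages(visitor_counts):
    print(f"{language:<11}{count:>7,}")
print(f"Long tail: {long_tail_share(visitor_counts):.0%} of visitors")  # Long tail: 25% of visitors
```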

The long tail is the whole point

A number that should bother you. Say 2% of your visitors are Japanese speakers. At a museum with 200,000 annual visitors, that's 4,000 people per year walking through your galleries with no guide in their language.

Under the old model, 4,000 visitors doesn't justify a $10K investment. Under the new model, those 4,000 people get a native-quality Japanese guide for effectively nothing.

Multiply that across every small language group. The 1.5% who speak Korean. The 3% who speak Italian. The 0.8% who speak Thai. Individually, none of them clear the old ROI bar. Together, they might represent 20% of your total audience, all currently underserved.
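Here is the same arithmetic applied to the four example groups. It uses the percentages above and the hypothetical 200,000-visitor museum; the shares are illustrative, not real data.

```python
ANNUAL_VISITORS = 200_000  # example museum size from the text

# Language shares from the example above (illustrative, not real data).
small_groups = {"Japanese": 0.02, "Korean": 0.015, "Italian": 0.03, "Thai": 0.008}


def visitors_per_year(share: float, annual: int = ANNUAL_VISITORS) -> int:
    """Convert a share of total visitors into a head count, rounded to a whole person."""
    return round(annual * share)


for language, share in small_groups.items():
    print(f"{language}: {visitors_per_year(share):,} visitors per year")

combined = sum(small_groups.values())
print(f"Combined: {combined:.1%} = {visitors_per_year(combined):,} visitors per year")
```

Those four groups alone come to 7.3% of visitors, or 14,600 people a year. The 20% figure assumes several more small groups on top of them.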

The shift in thinking matters most here. A language strategy isn't about picking winners. It's about removing an artificial constraint that forced you to pick winners in the first place.

Not all translations are equal

One legitimate concern with AI translation: quality. And it's a fair concern, because bad translation is worse than no translation. A visitor hearing stilted, literally-translated narration in their language will trust the museum less than if they'd just listened in English.

The problem is that most machine translation (the kind you get from running text through a generic API) sounds exactly like that. Stiff. Literal. Grammatically correct but culturally dead. A growing share of the text these models learn from is itself machine-translated, which risks a feedback loop of mediocrity.

Implementation matters here. Musa supports 40+ languages at what we'd call native quality. That means it sounds like someone who actually speaks the language wrote it: regional accents, natural idioms, appropriate register. A Guatemalan museum can have its guide speak with local slang. A Basque cultural center can offer Basque that sounds like Basque, not like translated Spanish.

The difference comes from prompt orchestration: layers of instructions that ensure the AI doesn't just translate words but adapts the entire delivery for each language. Without that orchestration layer, you get generic output. With it, you get something that respects the language and the culture behind it.
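Here is a rough illustration of layering. It is a hypothetical sketch, not Musa's actual prompts or pipeline. Each language gets its own instruction layer, stacked between shared guide rules and the exhibit content. `LanguageProfile`, `build_prompt`, and every instruction string below are invented for this example. The resulting prompt would still need to be sent to a model.

```python
from dataclasses import dataclass


@dataclass
class LanguageProfile:
    # Hypothetical per-language settings; field names are illustrative.
    code: str
    name: str
    register: str
    regional_notes: str = ""


# Shared layer: rules that apply to the guide in every language.
BASE_LAYER = (
    "You are a museum audio guide. Narrate the exhibit for a visitor standing "
    "in front of it. Keep facts exactly as given in the source text."
)


def build_prompt(profile: LanguageProfile, source_text: str) -> str:
    """Stack instruction layers: shared guide rules, language delivery, then content."""
    language_layer = (
        f"Write natively in {profile.name} ({profile.code}), not as a translation. "
        f"Use a {profile.register} register and idioms a native speaker would use."
    )
    if profile.regional_notes:
        language_layer += f" Regional guidance: {profile.regional_notes}"
    content_layer = f"Source text:\n{source_text}"
    return "\n\n".join([BASE_LAYER, language_layer, content_layer])


basque = LanguageProfile("eu", "Basque", "warm, conversational",
                         "Write Basque directly; do not calque Spanish phrasing.")
print(build_prompt(basque, "Placeholder description of the gallery."))
```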

Real-time means never outdated

There's a second-order benefit to real-time translation that's easy to miss. When your guide is pre-recorded, updating content means re-recording. In every language. If you change the description of a gallery, update an attribution, or add a new temporary exhibit, the update has to flow through the entire translation and recording pipeline again.

In practice, multilingual guides are almost always slightly outdated. The English version gets updated first, and the other languages catch up weeks or months later, if they get updated at all.

A real-time system sidesteps this entirely. Change the underlying content, and every language reflects the change immediately. No re-translation. No re-recording. Your temporary exhibition guide works in 40 languages from day one.
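Here is a minimal sketch of the difference, using hypothetical `ContentStore` and `RecordedTrack` types. Pre-recorded tracks remember which version of the text they were made from, so one edit makes every language's track stale. A real-time narration stub always reads the current text.

```python
from dataclasses import dataclass, field


@dataclass
class ContentStore:
    """Single source of truth for exhibit text (hypothetical structure)."""
    texts: dict[str, str] = field(default_factory=dict)
    versions: dict[str, int] = field(default_factory=dict)

    def update(self, exhibit_id: str, text: str) -> None:
        self.texts[exhibit_id] = text
        self.versions[exhibit_id] = self.versions.get(exhibit_id, 0) + 1


@dataclass
class RecordedTrack:
    language: str
    exhibit_id: str
    recorded_version: int  # content version at recording time


def stale_tracks(store: ContentStore, tracks: list[RecordedTrack]) -> list[RecordedTrack]:
    """Pre-recorded model: any track older than the current text needs re-recording."""
    return [t for t in tracks if t.recorded_version < store.versions[t.exhibit_id]]


def realtime_narration(store: ContentStore, exhibit_id: str, language: str) -> str:
    """Real-time model: built from the current text on every request.

    Stub only; a real system would call a generation model here.
    """
    return f"[{language}] {store.texts[exhibit_id]}"


store = ContentStore()
store.update("gallery-3", "Original attribution.")
tracks = [RecordedTrack(lang, "gallery-3", 1) for lang in ["en", "fr", "ja"]]

store.update("gallery-3", "Corrected attribution.")
print(len(stale_tracks(store, tracks)))  # 3: every language must be redone
print(realtime_narration(store, "gallery-3", "ja"))  # [ja] Corrected attribution.
```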

The pricing question

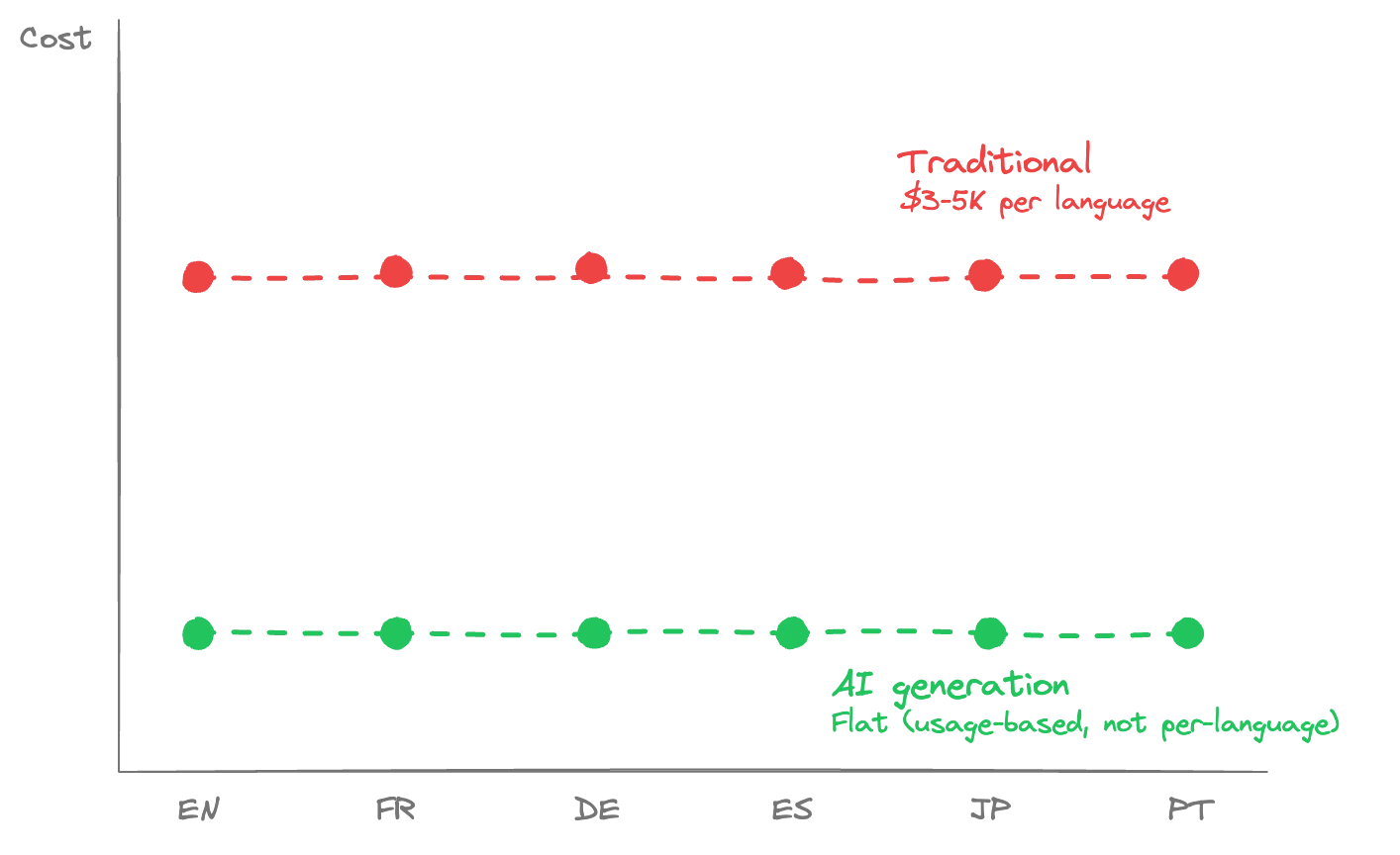

If you're evaluating multilingual options, you'll quickly notice that most audio guide platforms charge per language. Sometimes it's a flat fee per language, sometimes a percentage uplift, sometimes a separate "multilingual module" at an extra tier. The pricing model assumes that languages are features to be metered.

We think that's wrong. Musa doesn't charge for additional languages. We don't charge for features at all. Our pricing covers the cost of AI generation, and that's it. Whether you offer 2 languages or 42, the price is the same.

The philosophy behind this: charging extra for languages that cost almost nothing to generate is artificial scarcity. It punishes museums for serving their visitors. We'd rather cover our generation costs and make every language available out of the box.

In practice, this makes Musa roughly 10 to 100 times cheaper than the closest competitors attempting similar AI-driven multilingual guides. That's not a typo. The gap is that large because we're not marking up a commodity.

Making the case internally

If you need to justify multilingual expansion to your board or director, the argument is simpler than it used to be.

Old pitch: "We should spend $40K to add four languages because international visitors represent X% of revenue." This requires detailed ROI projections, budget committee approval, and usually results in a watered-down version of what you actually need.

New pitch: "We can serve every visitor in their own language at no additional cost. Here's the data on who's currently underserved." The conversation moves from budget to impact. How many visitors are we currently failing? What does that do to our satisfaction scores, our reviews, our reputation as an internationally welcoming institution?

For publicly funded museums, there's often a mandate around accessibility and inclusion. Multilingual access fits squarely within that mandate. For privately funded museums, the reputation and review impact is the lever. A Japanese visitor who gets a native-quality guide is more likely to leave a positive review than one who struggled through an English version.

Where to start

If you're currently offering 2-3 languages and want to expand, here's a practical sequence:

- Pull your visitor data. Nationality from ticket sales, geo from analytics, language from surveys. Build a ranked list.

- Launch with everything you can. If your platform supports it, don't stage-gate languages. Turn them all on. The marginal cost is zero, so there's no reason to phase them in.

- Promote actively. A language option does nothing if visitors don't know it exists. Update your signage, your website, your booking confirmation emails. "Available in 40+ languages" is a strong marketing line.

- Watch the analytics. Once you have multilingual usage data, you'll see exactly which languages get picked, how engagement compares across languages, and where to focus promotional efforts.

- Iterate on quality. Pay attention to feedback from native speakers in your top 5-10 languages. The AI is good, but specific cultural references or local terminology might need tuning.
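For the analytics step, here is a small sketch of a per-language summary worth looking at. It shows how often each language is picked and the average listening time. The `(language, minutes)` session format and the numbers are assumptions; your platform's export will look different.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical session export: (language code, minutes listened).
sessions = [
    ("en", 22.0), ("en", 18.0), ("ja", 30.0),
    ("ja", 26.0), ("ko", 15.0), ("pt", 20.0),
]


def summarize_by_language(records: list[tuple[str, float]]) -> dict[str, dict[str, float]]:
    """Count sessions and average listening time per language, most-picked first."""
    minutes_by_language: dict[str, list[float]] = defaultdict(list)
    for language, minutes in records:
        minutes_by_language[language].append(minutes)
    summary = {
        language: {"sessions": len(mins), "avg_minutes": mean(mins)}
        for language, mins in minutes_by_language.items()
    }
    # Sort by number of sessions, largest first (ties keep first-seen order).
    return dict(sorted(summary.items(), key=lambda kv: kv[1]["sessions"], reverse=True))


for language, stats in summarize_by_language(sessions).items():
    print(f"{language}: {stats['sessions']} sessions, {stats['avg_minutes']:.1f} min average")
```

Comparing average minutes across languages is one rough signal of where the guide lands well and where native-speaker feedback might be most useful.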

The museums we work with that get the most out of multilingual tend to treat it not as a technical feature but as an institutional value. It shows up in their marketing, their signage, their staff training. The technology makes it possible. The culture makes it work.

This isn't a feature decision anymore

For decades, "how many languages?" was a budget question. How much can we spend, and which languages give us the best return? That framing forced museums into uncomfortable compromises, leaving large portions of their audience without support.

That constraint is gone. The technology exists to offer every visitor a guide in their language, at native quality, updated in real time, for negligible additional cost. The question left is whether your institution wants to be the kind of place that serves everyone who walks through the door, or just the ones who happen to speak the right three languages.

If you're rethinking your language strategy, let's walk through the options.