Here's something every museum professional has seen: a visitor walks in energized, reads every label in the first three rooms, then starts drifting. By the fifth room, they're scanning. By the seventh, they're looking for the exit or the cafe. The collection hasn't gotten worse. Their brain is full.

This is museum fatigue, and it's not a character flaw. After 60 to 90 minutes of processing new visual and textual information, human attention degrades measurably. People absorb less, retain less, and enjoy less. Studies of visitor behavior have documented this for decades; it's one of the most consistent findings in museum research.

Audio guides are supposed to help. They offload the reading, provide context, and guide attention. But most audio guides make the problem worse. They demand the same level of engagement at stop twenty-two that they demanded at stop one. Every stop is the same format, the same depth, the same interaction. The guide doesn't know the visitor is running out of cognitive fuel, and it doesn't care.

That's a design failure.

The single-mode trap

Traditional audio guides offer one interaction model: press play, listen. The content is scripted at a fixed depth and pace. A visitor who wants more context can't get it. A visitor who wants less has to hit skip and lose the thread entirely.

Most AI audio guides haven't actually fixed this. The chatbot-style products (AskMona is the most visible example) replaced "press play" with "ask a question." That sounds like progress. It isn't, really. The interaction model is still a single mode: stand in front of a painting, ask the AI something, get a response, ask again, move on, repeat.

This is exhausting. The visitor has to generate every interaction: think of questions, formulate them, evaluate the answers, decide what to ask next. For the first three stops, that's interesting. By stop ten, it's work. The cognitive demand is actually higher than with a traditional guide, because the visitor is now responsible for driving the conversation, not just receiving it.

Think about the difference between watching a documentary and doing a research interview. Both involve learning. One requires almost no effort from the viewer. The other requires constant active participation. Most museum visitors, most of the time, want something closer to the documentary. They want to be guided.

What cognitive load actually means here

Cognitive load theory comes from educational psychology. The basic idea: working memory is limited. When you overload it, learning drops and frustration rises. Too much new information, too many decisions, or too complex an interface can each push it over the edge.

In a museum context, visitors are already processing a lot. Visual information from the objects. Spatial orientation in an unfamiliar building. Social awareness of other visitors. Physical fatigue from standing and walking. The audio guide adds another channel on top of all that.

The question isn't whether an audio guide adds cognitive load. It always does. The question is whether it adds more than it removes. A good guide reduces the total load by answering the questions a visitor would otherwise have to figure out themselves: what am I looking at, why does it matter, what should I see next. A bad guide adds load by demanding attention, forcing interaction, or delivering more information than the visitor can process.

The best guides do something harder. They adjust.

Three tiers of engagement

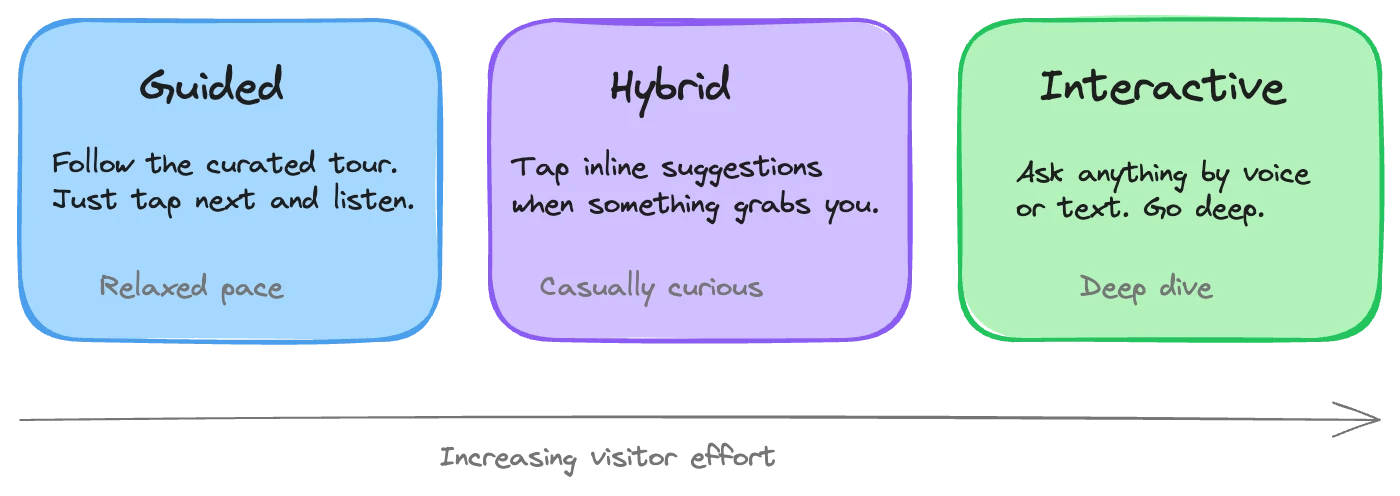

This is the model we've built at Musa, and it's the thing our competitors don't do. The idea itself is straightforward. But doing it well requires a system that handles both curated tours and real-time interaction. Most products do one or the other.

Here's how the three tiers work in practice.

Tier one: passive following. The visitor opens the guide, starts a tour, and just follows it. The AI narrates each stop on the curated path. The visitor taps "next" when they're ready to move on. That's it. No decisions, no typing, no questions. The museum designed this path. They selected the stops, wrote the narrative arc, set the tone and depth. The visitor simply walks through it.

This is the mode for tired visitors, for people who just want a pleasant experience without work, for the visitor who's on their second hour and has burned through their curiosity budget. It works because the tour was curated by people who know the collection. The visitor doesn't need to do anything except show up and listen.

Most traditional audio guides only offer this tier. The good news: it's what the majority of visitors want most of the time. The bad news: it's all they get, even when they want more.

Tier two: guided discovery. While the AI narrates a stop, it mentions related things: other works in the room, a connection to something two galleries away, a related exhibition. These aren't just verbal references. They appear as buttons on the screen. Tap one and you're taken to that tangent. Don't tap, and the tour continues as planned.

This is the middle ground. The visitor doesn't have to think of questions. The guide surfaces the interesting connections and lets the visitor decide whether to follow them. Compare someone saying "any questions?" (which puts the burden on you) with someone saying "that technique also shows up in the painting behind you, if you're curious" (which gives you a specific, low-effort option).

The cognitive load here is minimal. Read a suggestion, decide yes or no. No open-ended thinking required. But the visitor gets to shape their experience based on what actually catches their attention in the moment.

Tier three: full interaction. The visitor taps the microphone and asks whatever they want. They can speak or type. They can ask about something the guide didn't mention, request more detail on what it just said, or type the name of an exhibit across the museum and navigate there directly.

This is the mode for the deeply engaged visitor who showed up with specific interests or the art history student who wants to go deep on a particular technique. It's also useful for practical questions: where's the bathroom, how do I get to the Impressionists section, is there a cafe.

The point is that this tier exists alongside the other two. A visitor can follow the curated tour passively for twenty minutes, notice a suggestion that intrigues them and tap it, then ask a voice question about something that caught their eye. All in the same visit, no mode-switching friction.

Why this matters more than it sounds

The three-tier model isn't a feature list. It's a design philosophy: let visitors choose their depth at each moment.

A visitor who starts at tier three might drop to tier one after an hour. That's not failure. That's a guide adapting to the human using it. A visitor who starts at tier one might notice a suggestion in tier two that pulls them into a twenty-minute deep dive. That's engagement you'd never get from a static guide.

Museum fatigue is real, but it's not binary. People don't go from "fully engaged" to "done" in one step. They oscillate. Energy rises when something catches their eye, drops when they've been standing too long, rises again when they enter a new room. A guide that matches these fluctuations keeps people in the museum longer and gives them a better experience than one that demands consistent engagement they can't sustain.

We've watched this happen across partner sites. Visitors who would have left after fifty minutes with a traditional guide stay seventy or eighty minutes with a tiered system. They're often consuming less content per minute, not more. The guide just isn't fighting their energy level.

The competitor problem

AI audio guides currently fall into three categories.

Category one: AI-assisted creation. These tools use AI to help museums write scripts, translate content, or generate voiceover. The output is still a traditional guide: fixed narration, played linearly. The AI is in the production pipeline, not in the visitor's pocket. This speeds up content creation but doesn't change the visitor experience at all.

Category two: AI dialogue. Chatbot-style products where visitors ask questions and get answers about individual objects. No tour structure, no narrative arc, no curation. The visitor drives everything. As I described above, this is tier three only. Making every visitor operate at tier three for an entire visit is a recipe for disengagement.

Category three: full AI guide platforms. This is where Musa sits. Both the creation side (museums design tours, personas, narrative structures) and the delivery side (real-time generation, multi-language, visitor interaction) are handled in one system. The curated tour and the interactive dialogue aren't separate products bolted together. They're the same thing, operating on a spectrum.

The gap between category two and category three is large, and most visitors will feel it within ten minutes. Walking into a museum with a category-two guide is like walking into a library with a brilliant librarian but no catalog, no shelves, no reading lists. You can ask the librarian anything and get a great answer. But you have to know what to ask. You have to do all the work of figuring out where to go and what to look at. Most people don't want that. They want the curated experience with the option to go off-script when something grabs them.

Designing tours for variable engagement

If you're building audio guide content, on Musa or any other platform, here's how to think about accommodating different engagement levels.

Build the passive path first. Design a tour that works if the visitor never interacts beyond tapping "next." This is your baseline. It should have a narrative arc, a sensible sequence through the space, and enough depth at each stop that passive listeners feel they've had a real experience. This is the tour for the tired parent, the reluctant companion, the visitor on their second museum of the day.

Layer in connection points. At each stop, identify two or three natural tangents. A related work nearby. A technique that appears elsewhere in the collection. A historical connection to another period. These become your inline suggestions, the tier-two hooks that give curious visitors somewhere to go without making passive visitors feel like they're missing out.

Leave room for questions. Write your stop content knowing that some visitors will want to go deeper. Don't try to cram everything into the narration. The AI can handle follow-up questions, so your curated content can focus on the most important interpretation and let the visitor pull more if they want it. Shorter stops with rich underlying data are better than long stops that try to cover everything.

Think about energy pacing. Don't front-load your most demanding content. If the first five stops require intense focus and the last five are lightweight, you've matched the wrong content to the wrong energy levels. Alternate between high-density stops and lighter ones. Put your most emotionally resonant or visually striking stop in the second half, when visitors need a reason to keep going.
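As a rough illustration of the connection-point and pacing advice above, here is a minimal TypeScript sketch of how a tour might be represented and sanity-checked before it goes to visitors. Everything in it is hypothetical: the `Stop` and `Tangent` shapes, the `density` label, and the `pacingWarnings` helper are assumptions made for this example, not Musa's schema or any platform's API. The thresholds are rules of thumb chosen for illustration: two or three tangents per stop, no back-to-back high-density stops, and no more than one extra high-density stop in the first half.

```typescript
// Hypothetical tour data model for illustration only; not a real platform schema.
type Density = "high" | "light";

interface Tangent {
  label: string;        // text shown on the tier-two suggestion button
  targetStopId: string; // stop the visitor jumps to if they tap it
}

interface Stop {
  id: string;
  narration: string;    // curated tier-one script for the passive path
  density: Density;     // rough rating of how demanding the stop is
  tangents: Tangent[];  // tier-two connection points
}

// Returns human-readable warnings for common structural pacing problems.
function pacingWarnings(stops: Stop[]): string[] {
  const warnings: string[] = [];

  // Assumed rule of thumb: two or three connection points per stop.
  for (const stop of stops) {
    const n = stop.tangents.length;
    if (n < 2 || n > 3) {
      warnings.push(`${stop.id}: has ${n} tangents; aim for two or three`);
    }
  }

  // Alternate dense and light stops rather than stacking dense ones.
  for (let i = 1; i < stops.length; i++) {
    if (stops[i - 1].density === "high" && stops[i].density === "high") {
      warnings.push(`${stops[i - 1].id} and ${stops[i].id}: consecutive high-density stops`);
    }
  }

  // Flag a tour whose demanding content is concentrated in the first half.
  const half = Math.ceil(stops.length / 2);
  const countHigh = (xs: Stop[]) => xs.filter((s) => s.density === "high").length;
  const firstHalf = countHigh(stops.slice(0, half));
  const secondHalf = countHigh(stops.slice(half));
  if (firstHalf > secondHalf + 1) {
    warnings.push(
      `front-loaded: ${firstHalf} high-density stops in the first half vs ${secondHalf} in the second`
    );
  }

  return warnings;
}
```

A check like this only catches structural problems. It can't tell you whether a stop actually lands with visitors; that still takes watching real people use the guide.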

Test with real visitors, not just curators. Curators are deeply knowledgeable and deeply atypical as visitors. A stop that feels "too simple" to a curator might be exactly right for a visitor encountering the collection for the first time. Watch how real visitors use the guide across its full runtime, not just the first ten minutes.

The underlying principle

Audio guides have historically treated visitors as a single type: someone who wants to be talked at for a fixed amount of time about a fixed set of things. Every visitor got the same thing. The only variable was whether they pressed play.

That was a technology constraint. Recorded guides couldn't adapt. Digital guides on dedicated hardware could offer limited branching but nothing responsive. Even early smartphone guides were basically MP3 players with a map.

AI removes that constraint. The technology now exists to meet each visitor where they are, moment by moment. Passive when they're tired, suggestive when they're open, conversational when they're hooked. The same guide, the same tour, used differently depending on how much energy someone has left.

The museums that design for this, that build tours expecting visitors to move between tiers, will see longer visits, better satisfaction scores, and fewer people leaving with headphone-shaped indentations and nothing memorable to show for them.

If you're rethinking how your audio guide handles visitor engagement and fatigue, we'd be glad to show you how the three-tier model works in practice.