Most temporary exhibitions never get an audio guide. The math doesn't work: a three-month show can't justify the $15,000-plus it costs to script, record, and produce a traditional guide. By the time the audio is ready, the exhibition might be half over. So museums skip it, and visitors walk through temporary shows with less context than the permanent collection next door.

That's a real loss. Temporary exhibitions are often the most ambitious, most topical programming a museum does. They draw new audiences, respond to cultural moments, generate press. They're the exhibitions that would benefit most from guided interpretation, and the ones least likely to get it.

AI-generated audio guides change the equation completely. When content isn't recorded but generated in real time, adding or removing material stops being a production project. It becomes an edit.

Why temporary shows get left out

The traditional audio guide production pipeline is built for permanence. You draft scripts, get curatorial sign-off, hire a voice actor, record, edit, localize, and distribute. Each stop might cost $500 to $1,500 when you add it all up. The process assumes the content will be in place for years, amortizing that cost over thousands of visitors.

Temporary exhibitions break every assumption in that model.

A show running for 12 weeks might attract 50,000 visitors. At those numbers, a $30,000 audio guide costs $0.60 per visitor if you spread it across everyone who walks in. But only the visitors who opt in actually use it. At 10% adoption, that's 5,000 users and an effective cost of $6 each. For a smaller institution with a 6-week show, the numbers get worse fast.
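The arithmetic above is easy to rerun with your own figures. Here is a minimal sketch in Python; the function name `cost_per_user` and its parameters are ours for illustration, and the inputs are the example numbers from this section, not benchmarks.

```python
def cost_per_user(guide_cost: float, visitors: int, adoption_rate: float = 1.0) -> float:
    """Production cost divided by the number of visitors who actually use the guide."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    if not 0 < adoption_rate <= 1:
        raise ValueError("adoption_rate must be in (0, 1]")
    return guide_cost / (visitors * adoption_rate)


# Example figures from the text: a $30,000 guide and 50,000 visitors.
per_visitor = cost_per_user(30_000, 50_000)     # cost spread over every visitor
per_user = cost_per_user(30_000, 50_000, 0.10)  # only 10% of visitors opt in

print(f"per visitor: ${per_visitor:.2f}")  # per visitor: $0.60
print(f"per user:    ${per_user:.2f}")     # per user:    $6.00
```

Swap in your own production quote, expected attendance, and historical adoption rate to see where a given show lands.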

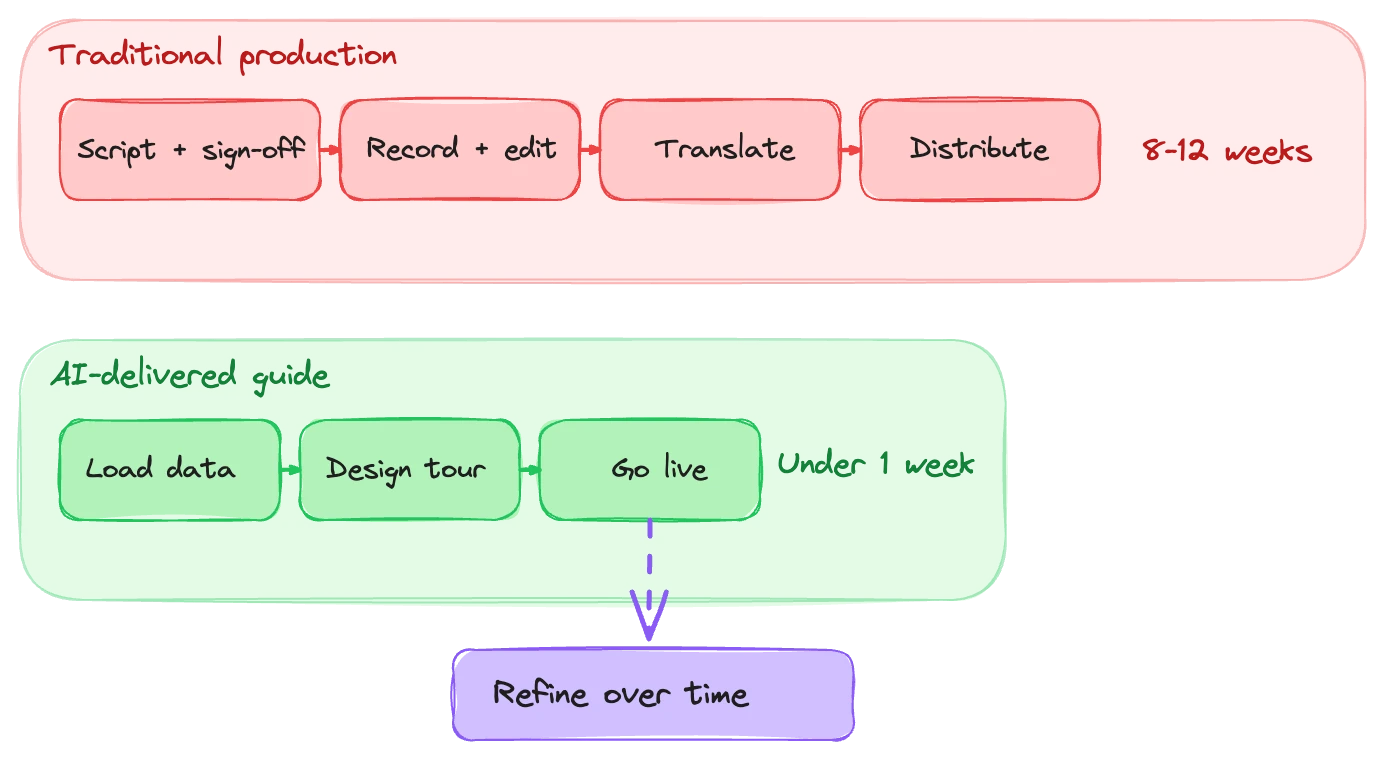

Then there's the timeline. Traditional production takes 8 to 12 weeks. You'd need a finished, locked exhibition checklist months before opening, exactly when curators are still making changes. Late-arriving loans, last-minute wall moves, a piece that doesn't clear conservation in time. Any of these invalidates a pre-recorded guide, and re-recording isn't cheap or quick.

The result is predictable: temporary exhibitions get a printed handout, maybe a few wall panels, and a hope that visitors figure it out on their own.

What changes with AI-generated guides

An AI-powered audio guide doesn't run from recordings. It generates speech in real time from your collection data, shaped by the persona and instructions you've set up. So "adding content" isn't a production step. It's a data entry task.

In practice, the process looks like this: you load the exhibition data (artist bios, object descriptions, curatorial notes, catalogue essays, whatever you have) into the system. You assign items to a tour and set the order. The guide already has a persona, your museum's voice, calibrated once and reused. It speaks about the new material the same way it speaks about everything else.

Adding one more item to an existing tour? One click. The guide picks it up, knows where it fits, and speaks about it in the voice and style you've already defined. No new script. No recording session. No revision cycle.

Want more control over specific stops? You can add per-stop instructions, telling the guide to mention a particular provenance story, or to open with a question about what visitors notice first. But these are optional refinements. The baseline quality without them is already high because the guide draws on your data and your persona, not a generic template.

We think of this as the 80/20 principle applied to exhibition interpretation. You get 80% of the way to an excellent guide with very little effort. The remaining 20% (fine-tuned per-stop instructions, sound design, special transitions) is there when you want it. But the exhibition doesn't have to wait for it.

Starting small and building up

One pattern we see often: a museum opens a temporary exhibition with basic audio guide coverage. The main items are in the system, the tour order is set, and the guide works. Then, over the first few weeks, staff add depth. Maybe the education team writes per-stop instructions for the five most popular pieces after seeing which ones visitors linger at. Maybe the curator adds context for an object that's generating a lot of questions.

The traditional model requires everything to be finished before day one. With AI generation, you can launch with what you have and improve as you learn what visitors actually want. Content changes are a normal part of the exhibition lifecycle, not an emergency that requires a production budget.

We've done complete museum onboardings, not just a single exhibition but an entire institution with thousands of items, in less than a week. A temporary show with 40 or 60 objects is a fraction of that effort.

Updates don't destroy previous work, either. Instructions and content are stored separately in the system. If you update an object's description (say, new research comes in mid-exhibition), your per-stop instructions and tour tweaks persist. You're not overwriting a finished product. You're editing a living one.
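To make the separation concrete, here is a small Python sketch. It is an illustration of the idea, not our actual schema: the class names, field names, and the `update_description` helper are all hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ObjectRecord:
    """Collection data for one object (hypothetical fields)."""
    object_id: str
    title: str
    description: str


@dataclass(frozen=True)
class StopInstruction:
    """Optional per-stop guidance, stored apart from the object data."""
    object_id: str
    text: str


# Two separate stores, both keyed by object id.
content = {
    "obj-1": ObjectRecord("obj-1", "Untitled study", "Original catalogue description."),
}
instructions = {
    "obj-1": StopInstruction("obj-1", "Open with a question about what visitors notice first."),
}


def update_description(store: dict, object_id: str, new_description: str) -> dict:
    """Return a new content store with one description changed."""
    updated = dict(store)
    updated[object_id] = replace(store[object_id], description=new_description)
    return updated


# New research arrives mid-exhibition: only the content store changes.
content = update_description(content, "obj-1", "Description revised after new research.")
```

Because the instruction lives in its own store, the revised description and the existing per-stop guidance are simply combined again the next time the guide speaks about the object.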

Travelling exhibitions across venues

Travelling exhibitions have an extra problem that traditional audio guides can't solve well. A show that moves from Berlin to Tokyo to New York needs interpretation at each venue. With recorded guides, that means three separate productions, each with its own acoustics, floor plan, languages, and budget. Most travelling shows either skip audio guides entirely or leave each venue to decide independently whether the investment makes sense.

With an AI system, the exhibition content and tour logic are portable. The curatorial narrative, the object data, the persona: all of it transfers. What changes between venues is navigation. Which room is the show in, what's the floor plan, where are the exits. Those are configuration changes, not content changes.

At Musa, we support rearranging content in tours and on floor plans without touching the interpretive material itself. A travelling exhibition can set up its guide once, then adjust the spatial layer at each new venue. The guide tells visitors where to go next based on the local layout while delivering the same curatorial narrative everywhere.
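One way to picture the split between the interpretive layer and the spatial layer is the Python sketch below. The data shapes, venue keys, and the `build_stops` function are assumptions made for illustration; they do not describe our internal data model.

```python
# Portable layer: travels with the exhibition unchanged.
TOUR_ORDER = ["obj-a", "obj-b", "obj-c"]
NARRATIVE = {
    "obj-a": "Placeholder interpretive text for the opening work.",
    "obj-b": "Placeholder interpretive text for the second work.",
    "obj-c": "Placeholder interpretive text for the closing work.",
}

# Spatial layer: configured separately for each venue.
VENUE_LAYOUTS = {
    "venue-1": {"obj-a": "Room 1", "obj-b": "Room 1", "obj-c": "Room 2"},
    "venue-2": {"obj-a": "Hall B", "obj-b": "Hall C", "obj-c": "Hall C"},
}


def build_stops(venue: str) -> list[dict[str, str]]:
    """Combine the shared tour with one venue's layout."""
    layout = VENUE_LAYOUTS[venue]
    return [
        {"object_id": oid, "room": layout[oid], "narrative": NARRATIVE[oid]}
        for oid in TOUR_ORDER
    ]


first_venue = build_stops("venue-1")
second_venue = build_stops("venue-2")
```

Both venues get the same narrative in the same order. Only the `room` values differ, which is the part each venue configures for itself.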

The language problem goes away too. If the guide already supports 40+ languages (which AI generation handles natively), each venue gets multilingual support without commissioning local translations. The show arrives with its guide ready to go.

The permanent collection benefits too

The same flexibility applies beyond temporary exhibitions, whenever a museum rearranges, rotates, or updates its permanent collection. Gallery refreshes that move 20 objects to different rooms. A wing that closes for renovation, redistributing key pieces across the building. New acquisitions that need to be woven into existing tours.

In a traditional setup, each of these changes means re-recording. Realistically, most museums just let the audio guide get progressively out of sync with what's actually on the walls. We've heard from institutions still using audio guides that reference objects moved three years ago.

When the guide generates from live data, it's always current. Move an object from Gallery 3 to Gallery 7 in the system, and the guide routes visitors to the right place. Remove an object on loan, and it drops out of the tour without leaving a gap.
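As a rough sketch of what "generating from live data" means for routing, consider the Python below. The `Item` class, the `current_stops` function, and the sample objects are hypothetical and kept deliberately simple.

```python
from dataclasses import dataclass


@dataclass
class Item:
    """Live record for one object (hypothetical fields)."""
    gallery: str
    on_display: bool = True


def current_stops(tour_order: list[str], items: dict[str, Item]) -> list[tuple[str, str]]:
    """Derive the tour from the current records each time it is needed."""
    return [
        (object_id, items[object_id].gallery)
        for object_id in tour_order
        if items[object_id].on_display
    ]


items = {
    "vase": Item("Gallery 3"),
    "portrait": Item("Gallery 3"),
    "mask": Item("Gallery 4"),
}
tour = ["vase", "portrait", "mask"]

items["vase"].gallery = "Gallery 7"    # the vase moves to another gallery
items["portrait"].on_display = False   # the portrait goes out on loan

stops = current_stops(tour, items)
print(stops)  # [('vase', 'Gallery 7'), ('mask', 'Gallery 4')]
```

Nothing is re-recorded or re-exported here. The tour is recomputed from the records, so the move and the loan show up the next time a visitor starts the guide.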

What "one click" actually means

We want to be specific about what adding a new item involves, because "easy" is vague and every product claims it.

Basic addition: You add the object data (title, artist, date, description, any relevant context). You place it in a tour. The guide can now speak about it. Takes minutes, not hours. No script review, no voice recording, no localization step. The guide speaks about the new item in every supported language automatically, using the persona and voice you've already set.

Refined addition: You write per-stop instructions, maybe two or three sentences telling the guide what to emphasize, what question to ask, or what connection to draw to nearby objects. You might add ambient sound or adjust the stop's position in the tour narrative. More like 15 to 30 minutes of curatorial thought. Still not a production process.

Advanced addition: You design specific sound cues, record an audio excerpt to splice in, or write detailed instructions for how the guide should handle follow-up questions about a particular piece. The most labor-intensive option, and entirely optional. Most items don't need it.

The point is that the floor for quality is high and the ceiling is higher. You choose how much effort to invest per item, and you can upgrade any item later without affecting anything else.

Making the case internally

If you need to justify this to a director or board, the argument is straightforward. Traditional audio guides for temporary exhibitions cost too much per visitor, take too long to produce, and can't adapt to exhibition changes. As a result, most temporary shows don't get audio guide coverage at all.

With AI-generated guides, temporary exhibitions get the same interpretive quality as the permanent collection. The marginal cost of covering a temporary show is low because the infrastructure already exists. The time to launch is days, not months. And the guide improves over the run of the exhibition rather than decaying from day one.

Forget the technology angle. It's a visitor experience argument. The people who come specifically for your temporary exhibition, often your most engaged, most willing-to-pay audience, deserve interpretation that matches the ambition of the programming.

Getting started

If your temporary exhibitions currently go without audio guide support because the economics don't make sense, that constraint no longer applies. If you're spending months producing guides that are outdated before opening day, that process can be dramatically shorter.

We've helped museums go from zero to a working guide in under a week, including ones with large collections. A temporary show is a natural starting point: lower stakes, clear timeline, and a chance to see how the system handles real visitors before committing to a broader rollout.

If that sounds relevant to what you're working on, let's talk through the specifics.